Part 5: The Debugging Gauntlet – When AI Assistants (and Humans) Make Mistakes

This is the most important part of our story. No project, especially one involving new technologies, works perfectly on the first try. The process of debugging—of hitting a wall, diagnosing the problem, and finding a solution—is where the deepest learning happens. This section is a journal of the bugs we encountered and the breakthroughs we achieved.

A Collaborative Approach to Debugging

Throughout this process, I treated my AI assistants not as infallible oracles but as collaborative partners. While Gemini was my primary tool, there were moments we hit a loop, with the AI providing repetitive, unhelpful suggestions. In these cases, turning to a different model was key.

I used Claude Sonnet to re-explain the problem from a different angle and GitHub Copilot to analyze specific code snippets.

This multi-tool approach was invaluable for getting “unstuck” and highlights a crucial skill in modern development: knowing which tool to use for which problem.

Bug #1: PERMISSION_DENIED

Our very first deployment failed with a permissions error. The logs were clear: the Cloud Build service account didn’t have the authority to create a new Cloud Run service.

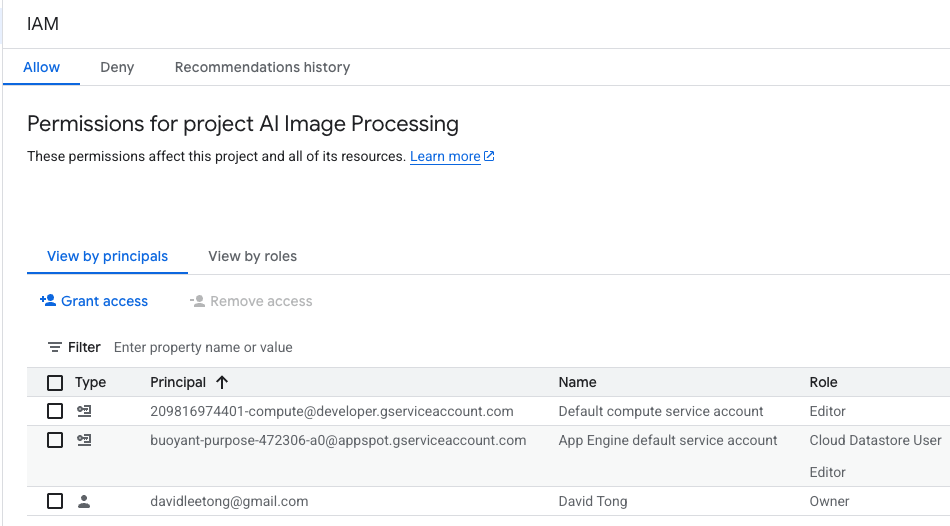

The Fix: This was a lesson in GCP’s security model, Identity and Access Management (IAM). We had to go into the IAM panel and explicitly grant the Cloud Build service account two roles: Cloud Run Admin (roles/run.admin) and Service Account User (roles/iam.serviceAccountUser).

Bug #2: CORS (Failed to Fetch)

With the backend deployed, our local index.html file couldn’t connect, showing a CORS error in the browser console.

The Fix: A classic web development issue. The AI explained that, for security, browsers stop a page’s scripts from reading responses that come from a different origin unless the server explicitly allows it. The solution was to add the Flask-Cors library to our backend, which attaches CORS headers to the server’s responses so the browser knows that requests from our local file are permitted.

Bug #3: ModuleNotFoundError

The application was crashing instantly on startup. The Cloud Run logs showed a clear error: ModuleNotFoundError: No module named 'flask'.

The Fix: This was a humbling typo. After much frustration, we discovered the dependencies file was incorrectly named requirements.tx instead of requirements.txt. A single missing “t” was preventing any of our libraries from being installed.
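A check like the one below might have caught this before deployment. It is only a sketch of the idea, not something we ran at the time: the function name check_requirements_file and the 0.8 similarity cutoff are my own choices.

```python
import difflib
from pathlib import Path

EXPECTED = "requirements.txt"


def check_requirements_file(directory="."):
    """Return (ok, message) saying whether requirements.txt is present."""
    folder = Path(directory)
    if (folder / EXPECTED).is_file():
        return True, f"Found {EXPECTED}"

    # Look for near-miss filenames such as 'requirements.tx'.
    names = [p.name for p in folder.iterdir() if p.is_file()]
    close = difflib.get_close_matches(EXPECTED, names, n=1, cutoff=0.8)
    if close:
        return False, f"{EXPECTED} is missing; did you mean to rename {close[0]}?"
    return False, f"{EXPECTED} is missing"
```

Calling check_requirements_file() from the project folder before each deploy returns a flag and a message you can print, which is quicker than reading through the Cloud Run logs after the fact.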

Bug #4: WORKER TIMEOUT

Even with the dependencies file fixed, the service still failed to start. The logs showed a “worker timeout” and hinted at an out-of-memory issue.

The Fix: The AI diagnosed this as a resource problem. The default memory allocated to a Cloud Run instance was not enough for our application (which loads several libraries) to start up in time. The solution was to redeploy with an increased memory allocation using the --memory=1Gi flag.

Bug #5: Bucket does not exist

Finally, the application was running, but it crashed mid-process. The logs showed the final error: “The specified bucket does not exist.”

The Fix: The last piece of the puzzle was a simple configuration error. The bucket name hardcoded in the main.py file was still a placeholder and didn’t match the actual name of our Cloud Storage bucket.
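One way to avoid this class of mistake is to read the bucket name from configuration and refuse to start if it still looks like a placeholder. The sketch below assumes an environment variable called BUCKET_NAME and a placeholder value of your-bucket-name; both are hypothetical and not what our main.py used.

```python
import os

# Hypothetical placeholder values that should never reach production.
PLACEHOLDER_NAMES = {"", "your-bucket-name"}


def get_bucket_name(env=None):
    """Read the bucket name from the environment, rejecting placeholders."""
    env = os.environ if env is None else env
    name = env.get("BUCKET_NAME", "").strip()  # hypothetical variable name
    if name in PLACEHOLDER_NAMES:
        raise RuntimeError(
            "BUCKET_NAME is unset or still a placeholder; "
            "set it to the real Cloud Storage bucket name."
        )
    return name
```

With a check like this, a forgotten placeholder surfaces as a clear startup error rather than a crash halfway through a request.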

With this final bug squashed, the application worked! Frankly, I don’t enjoy dealing with bugs, and it can get quite frustrating. Before I had immediate AI support, not knowing where to search or what to ask, and having to wait for responses from others online, dissuaded me from even trying to code.

In the final part of our series, we’ll put the finished product to the test, add our planned feature enhancements, and reflect on the key lessons from this entire journey.
